Analysis

The Rise of AI-Generated Hate Content

The views and beliefs expressed in this post and all Interfaith Alliance blogs are those held by the author of each respective piece. To learn more about the organizational views, policies and positions of Interfaith Alliance on any issues, please contact [email protected].

Grok, X’s artificial intelligence chatbot, went on a rampage last week, responding to user questions with antisemitic statements and at one point calling itself “MechaHitler.” Despite the posts being taken down and a statement from X that it was “taking action to ban hate speech,” Grok continued to make antisemitic statements later that week.

In a similar incident a couple of months ago, Grok repeatedly claimed that a white genocide was occurring in South Africa, stating that it had been “instructed by my creators” to make those statements. Notably, Grok discussed this supposed genocide even in response to questions unrelated to the topic.

These incidents come amid an increase in hateful content online and a decrease in active moderation by social media companies. Advocacy groups have noted that antisemitic and Islamophobic posts have risen dramatically, due in part to the conflict in the Middle East. Existing far-right platforms and accounts have used the conflict to expand their reach beyond traditional right-wing audiences in an effort to gain more supporters for their hateful messages.

The incidents with Grok also illustrate a growing trend of using AI to create hate content, particularly antisemitic and Islamophobic content, which is then shared with a wide audience. Although companies say they have built safeguards into their AI tools, these safeguards aren’t always effective. For example, if the content an AI model is trained on is biased or prejudiced in some way, that bias can affect the responses the AI returns, even to queries on unrelated topics. Alternatively, individuals can creatively word questions to get hate content in response.

As these incidents show, the rise of AI can make hate speech even harder to moderate. Not only is it easier than ever to generate hate speech, but AI chatbots that disseminate hateful content often appear more reliable than traditional hate accounts. For that reason, minority communities, particularly minority religious communities, are increasingly under attack. To create a safe online community for all individuals, social media companies must be more transparent about the data used to train AI models and more proactive in combating AI-generated hate content on their platforms. Furthermore, governments need to take urgent action to regulate AI-generated hate content so that content moderation meets a baseline standard and our digital spaces are welcoming to all.

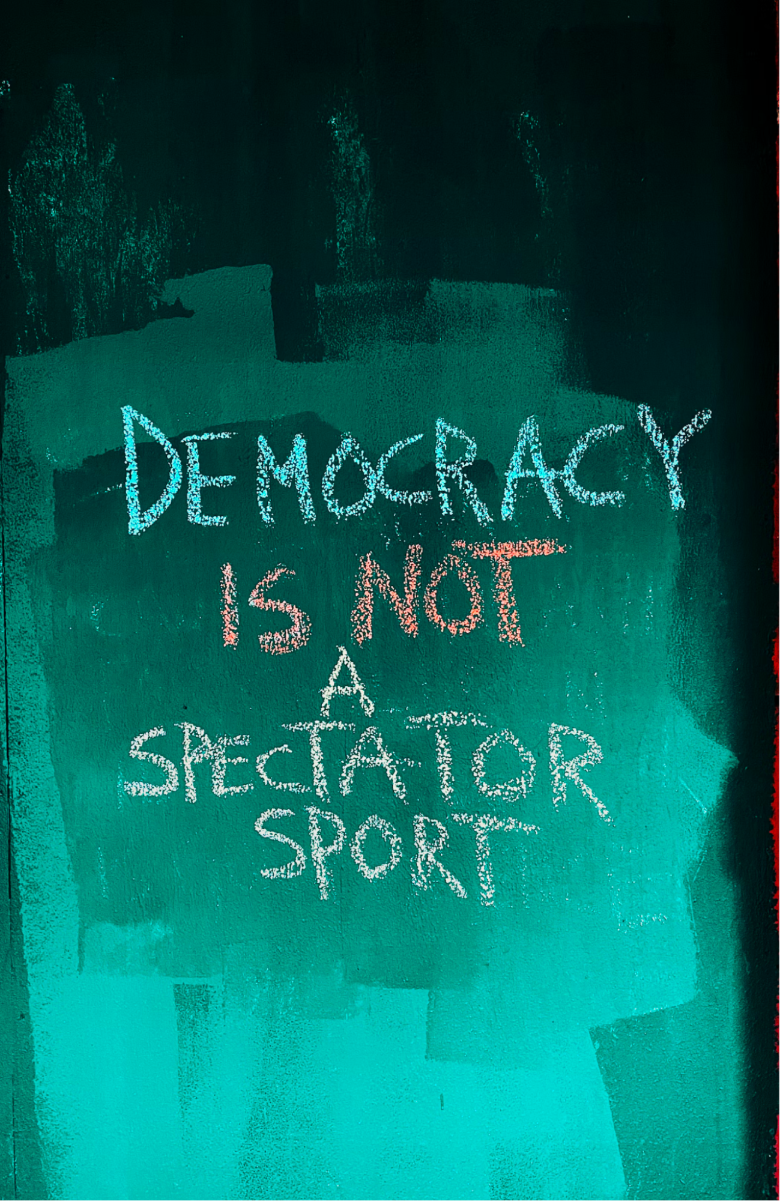
